AI voice scams use convincing voice clones to trick you into sending money or sharing sensitive information. Since all it takes is a short recording and free software, these scams are now easier than ever to pull off and increasingly common.

To protect yourself, it helps to understand the basics — the methods, the different types, and the warning signs. With that in mind, let’s start by looking at what AI voice scams actually involve.

What are AI voice scams, and how do they work?

AI voice scams involve criminals using artificial intelligence to clone a real person’s voice and make fake calls that sound real. They can seem like an elaborate, high-tech heist, but the idea behind them is actually rather straightforward.

During these calls, scammers apply pressure, induce panic, or establish trust to push their target into acting fast. Whatever angle they take, the goal is usually the same: to steal money or personal information.

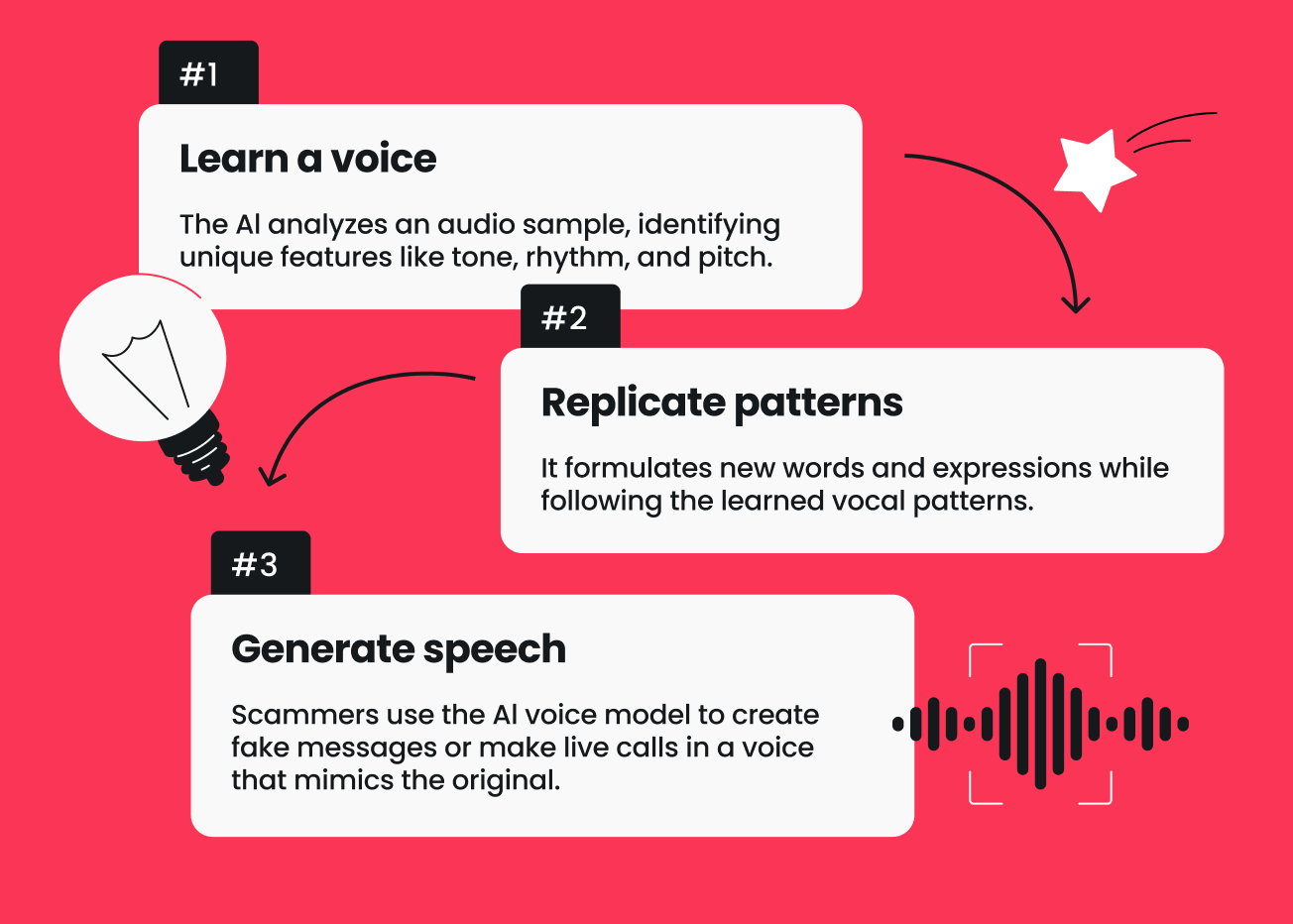

How AI voice cloning works

AI voice cloning is at the heart of these scam calls. Simply put, it trains a computer to copy the unique traits of a person’s voice so that it can recreate the voice accurately.

Step 1

It all starts with feeding the AI audio clips of a person speaking. Longer samples are better, but even just a few seconds of audio can give the AI enough to work with.

Step 2

From these snippets, the AI identifies what makes the voice distinctive — picking up on characteristics like pitch and tone, rhythm and tempo, pronunciation, inflection, and more.

Step 3

Once it has learned these patterns, the AI can generate entirely new speech — using words, phrases, and expressions the person never actually said — while still sounding like the original voice.

Step 4

Scammers can then use the voice model to create fake messages or even make live calls that sound eerily real.
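
To make Step 2 a little more concrete, here is a minimal Python sketch of how a single vocal trait, pitch, can be measured from raw audio. It is not how any particular voice-cloning tool works, since real systems learn far richer features with neural networks. The sample rate, the synthetic 200 Hz tone standing in for a recorded voice, and the helper names `make_tone` and `estimate_pitch_hz` are all assumptions made for illustration.

```python
import math

SAMPLE_RATE = 8000  # samples per second (assumed for this illustration)


def make_tone(freq_hz, duration_s=0.1, sample_rate=SAMPLE_RATE):
    """Generate a pure sine tone as a stand-in for a recorded voice."""
    n = int(duration_s * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def estimate_pitch_hz(samples, sample_rate=SAMPLE_RATE, min_hz=80, max_hz=400):
    """Estimate the fundamental frequency with simple autocorrelation.

    Tries every lag in a range that roughly covers human speech and
    returns the frequency whose lag makes the signal best match itself.
    """
    min_lag = sample_rate // max_hz
    max_lag = sample_rate // min_hz
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    tone = make_tone(200)
    print(f"Estimated pitch: {estimate_pitch_hz(tone):.1f} Hz")
```

For the synthetic tone, the estimate should land at or very near 200 Hz. A real voice is much messier than a sine wave, which is why cloning software analyzes many traits at once rather than pitch alone.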

Why are AI voice scams rising?

Deepfake-related losses — including those linked to AI voice cloning scams — have surged to $1.56 billion. More than $1 billion of that happened in 2025 alone.

These scams are seemingly mushrooming, and here’s why:

- Free or cheap AI tools: low-cost or free online tools remove the need for sophisticated setups and expensive software;

- Ease of use: most modern voice-cloning programs are simple and user-friendly, allowing anyone to create a clone in minutes — no technical know-how necessary;

- More voice content available: criminals now have a much larger pool of material to work with as people constantly share voice notes, post videos, and use voice assistants;

- Realistic results: modern AI can replicate tone and emotion almost flawlessly, making manipulation more effective;

- Increased visibility: growing public awareness and media coverage push these scams into the spotlight, making each case more noticeable.

How scammers get your voice

With so much content and audio shared online these days, getting hold of your personal info — including your voice — is easier than you might think. Let’s run through some common methods scammers use to collect voice samples.

Social media videos

Social platforms like TikTok, Instagram, and YouTube are the most common hunting grounds for scammers looking for audio samples. Just 5-10 seconds of casual talk about your lunch or weekend plans can be enough to create a clone. Since these clips are natural and usually high quality, scammers can feed them straight into voice-cloning software.

Voicemail greetings

Scammers can also grab your voice from voicemail greetings, either through leaked data or by calling your number and recording it. These clips are pretty handy for bad actors since they capture natural speech and often include a name. Plus, since most people practice and record multiple takes before settling on one, the audio usually needs little to no cleanup.

Interviews, podcasts, and webinars

Public recordings like podcasts, interviews, panels, and webinars are easy sources for AI voice cloning. They provide high-quality speech, which helps the AI model replicate your voice with little effort. These extended recordings also give the AI more material to work with than, say, a 15-second Instagram reel.

Recorded calls from old data breaches

Some bad actors may turn to leaked call center recordings or hacked apps to get voice samples. They buy these clips online, extract the audio, and use it to create clones. Since the recordings are from real calls, the cloned voice sounds natural in similar contexts, making the scam feel more believable.

Direct manipulation

If scammers can’t find your voice online, they go straight to the source and try to get you to speak. They may call while pretending to be a survey company, bank, or delivery service confirming an appointment. Often, they’ll ask questions that force you to answer in full sentences or repeat specific phrases.

Types of AI voice scams

Scammers often cycle through a range of tactics rather than stick to a single trick. Below are the most common types of AI voice scams you’ll see in the wild.

Emergency impersonation (“help me!”) scams

These scams use a cloned voice of someone close to you — a child, partner, parent, or sibling — to fake an emergency. The caller typically sounds frantic, cranking up Acting 101 theatrics with uncontrollable sobbing, shaky whispers, or background noises like sirens and yelling to push you into acting fast.

The storyline is usually familiar as well: arrested, kidnapped, or in an accident. Others play it coy with the ever-vague “I can’t talk freely right now.” No matter the tale, it always ends with the scammer demanding fast payment and that you keep it quiet.

Business and CEO impersonation scams

In these scams, bad actors pretend to be a company executive — usually a CEO, CFO, or senior manager — to trick employees into carrying out “pressing” tasks. The caller usually sounds calm and authoritative while making routine-sounding requests like approving payments, moving funds, sharing login credentials, or sending confidential documents.

The sneakier scammers do their homework and name-drop or slip in internal information scraped from company websites, press releases, LinkedIn, or past breaches to make the request less suspicious. Since the task is framed as top-priority and coming from higher-ups, employees often comply immediately.

Financial, crypto, and investment scams

In these scams, fraudsters clone the voice of someone you’re likely to trust, such as a bank officer, financial advisor, or a public-facing figure like a crypto influencer. They might claim there’s an urgent issue with your account, offer “exclusive” investment opportunities, or pitch time-sensitive deals.

To sell the story, they often throw in jargon, fake case numbers, or bogus statistics — painting the situation as urgent or a once-in-a-lifetime opportunity. The goal is to push you into transferring funds, moving crypto, sharing credentials, or approving account changes.

Voice-authentication bypass attempts

Unlike other AI voice cloning scams that target victims directly, these ones go after banks, customer support hotlines, or account recovery services — pretending to be the victim. Here, scammers use cloned voices to answer security prompts, repeat passphrases, or even chat with a human agent to bypass voice-based identity verification.

Since voice checks are rarely used on their own, scammers typically turn to voice cloning only after stealing other personal information, such as usernames and passwords. The cloned voice is the last step that helps them cruise through voice-based security checks.

AI phone-call scams

These scams are entirely fake phone calls where the caller’s voice is fully AI-generated to sound human. You might expect stilted, robotic speech, but modern AI-synthesized voices can be surprisingly natural and conversational. The AI can pause, shift tone, or add filler words just like a real person.

Common angles include claims of unpaid taxes, tech support issues, suspicious account activity, or mounting debts. From the scammer’s side, AI audio makes mass calling easy and flexible. They can make hundreds of calls, and each one can be tailored to its target.

How AI voice scams unfold step-by-step

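Different AI voice scams may use different tricks and tactics, but they usually still follow the same basic playbook. Here’s how they typically look in action.

Step 1: They collect a voice sample

First, scammers get hold of a recording of the target’s voice from sources such as social media videos, voicemail greetings, public recordings, leaked calls, or a direct phone call.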

Step 2: They clone the voice using AI tools

Next, scammers feed the audio into voice-cloning software. The AI model then analyzes your vocal patterns like pitch, cadence, pronunciation, and inflection — essentially everything that makes you sound like you. From there, it generates a realistic synthetic version of your voice.

Step 3: They create a believable story

With the cloned voice in hand, scammers move on to designing the scenario and scripting the call — usually something urgent, dramatic, and distressing. Some classic angles include being stranded in a foreign country, arrested for a minor offense, involved in a car accident, or even kidnapped.

Step 4: They call or send a voice message

The message is then delivered via a live phone call, voicemail, or voice note on platforms like WhatsApp or Telegram. Real-time calls crank up the pressure and cut down thinking time, while voice messages give the victim the chance to replay the audio, driving the point home. Either way, the familiar voice lowers suspicion and makes the message feel authentic.

Step 5: They ask for urgent money or sensitive data

The final stretch is the info or money extraction. This is when scammers push for immediate action like transferring funds, sharing login credentials, or sending confidential files. Urgency is the secret sauce at this stage, with victims told there’s no time to verify, call back, or even think things through.

Signs that a call might be AI-generated

AI-generated voice calls aren’t always easy to sniff out, but they do tend to have subtle inconsistencies that give them away.

Here are some signs a call may be AI-generated:

- Strange pauses or unnatural rhythm: pauses or start-stops that feel abrupt, misplaced, or calculated;

- Perfect pronunciation without emotion: the voice remains impeccably clear with little to no trembling or breathiness in supposedly emotional moments;

- Overly formal or robotic tone: the caller avoids slang and contractions, giving the impression of trying a little too hard to sound normal;

- Mismatched background noise: crackling static, looped audio, or total silence, even as the caller claims to be moving around;

- Delayed replies: short, consistent delays before each reply, even when the speech is otherwise fluent;

- Pressure, urgency, or secrecy: the caller demands immediate action, discourages any form of verification, and insists on secrecy;

- Refusal to switch to video: the caller dodges live video, refuses call-back verification, or balks at third-party confirmation with creative excuses like a broken camera.
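
If it helps to think of the signs above as a checklist, the Python sketch below shows one way to tally them. Everything in it is an assumption made for illustration: the sign labels, the delay thresholds, the rule that two signs are enough, and the choice to treat pressure or secrecy as sufficient on its own. It is a thinking aid, not a detector.

```python
from statistics import mean, pstdev

# Warning signs from the list above; the labels themselves are hypothetical.
WARNING_SIGNS = {
    "unnatural_rhythm", "flat_emotion", "robotic_tone", "odd_background",
    "delayed_replies", "pressure_or_secrecy", "refuses_video_or_callback",
}


def replies_look_scripted(delays_s, max_spread_s=0.15, min_mean_s=0.5):
    """Flag reply delays that are both noticeable and suspiciously uniform.

    The thresholds are illustrative assumptions, not measured values.
    """
    if len(delays_s) < 3:
        return False  # too few replies to judge
    return mean(delays_s) >= min_mean_s and pstdev(delays_s) <= max_spread_s


def count_red_flags(observed):
    """Count how many known warning signs were observed during a call."""
    return len(WARNING_SIGNS & set(observed))


def should_verify(observed, threshold=2):
    """Recommend hanging up and verifying once enough signs stack up.

    Pressure or secrecy alone is treated as reason enough (an assumption).
    """
    flags = set(observed)
    return "pressure_or_secrecy" in flags or count_red_flags(flags) >= threshold


if __name__ == "__main__":
    seen = ["robotic_tone"]
    if replies_look_scripted([1.0, 1.05, 0.95, 1.0]):
        seen.append("delayed_replies")
    print("Hang up and verify" if should_verify(seen) else "Stay alert")
```

The thresholds are deliberately low because a quick check costs little compared with a rushed transfer.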

How to protect yourself from AI voice scams

AI voice scams count on you acting fast and without thinking things through. The best defense is to stay calm, verify requests, and make full use of security tools.

Create a family safe word

A simple but effective way to verify identity is to use a shared safe word known only to close family members. If someone calls claiming to be in trouble, they must provide the secret word before you jump into action. Make sure to choose a word or phrase that’s hard to guess and not publicly available online — obvious choices like street names or pet names won’t cut it.
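
One way to get a safe phrase that is hard to guess is to let a computer pick random words for you. The Python sketch below does this with the standard `secrets` module. The short `WORDS` list and the `make_safe_phrase` helper are hypothetical and kept tiny for readability; a real word list should contain thousands of entries.

```python
import secrets

# Tiny illustrative word list; a real one should contain thousands of words.
WORDS = [
    "amber", "bison", "cactus", "drizzle", "ember", "fjord", "gravel",
    "harbor", "iguana", "juniper", "kettle", "lantern", "mango", "nutmeg",
    "orchid", "pebble", "quartz", "radish", "saddle", "tundra",
]


def make_safe_phrase(num_words=3, words=WORDS):
    """Pick random words with a cryptographically secure generator."""
    if num_words < 1:
        raise ValueError("num_words must be at least 1")
    return " ".join(secrets.choice(words) for _ in range(num_words))


if __name__ == "__main__":
    print(make_safe_phrase())
```

Each run gives a different phrase. Because the words are chosen at random, the result has no link to your address, pets, or anything else a scammer could look up.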

Verify through another channel

Don’t just go off a single call or voice message, even if the voice sounds familiar. Instead, take a moment to actually cross-check and verify the caller’s identity. Take a deep breath and hang up, then text, video call, or phone the person directly to confirm the call is genuine. Even the most urgent request will survive a quick check.

Limit how much of your voice you post online

Be selective about what you share on TikTok, Instagram, and similar platforms. Clear, uninterrupted speech — like long monologues or Q&As — is prime material for fraudsters. That said, even short clips, stories, and reels can be scraped, manipulated, and reused. It’s safest to assume anything public can be copied, so set your profiles to private or limit followers to those you know.

Don’t answer unknown calls with long greetings

Don’t open your call with scripted introductions like “Hello, this is [your name] from XYZ company speaking.” Better yet, let unknown callers make the first move and speak before you. Otherwise, malicious actors could record these openings and reuse them to train or fine-tune voice clones.

Strengthen your account security

You might not be able to completely prevent fraudsters from copying your voice, but you can reduce the potential damage a cloned or misused voice can cause by shoring up your account security.

Start with these basic steps:

- Enable MFA (Multi-factor Authentication) — especially for vital accounts like email, banking, and cloud services — to add another layer of verification;

- Avoid voice-based authentication whenever possible or add an extra verification step to confirm identity;

- Use strong, unique passwords by combining upper and lowercase letters, numbers, and special characters, and use a password manager to store them securely.
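
For the last point, most password managers will generate passwords for you. If you are curious what that looks like under the hood, here is a minimal Python sketch. The `generate_password` helper, the `SPECIALS` character set, and the default length of 16 are assumptions for illustration, not a recommendation from any specific tool.

```python
import secrets
import string

SPECIALS = "!@#$%^&*()-_=+"  # assumed set; some sites accept other symbols


def generate_password(length=16):
    """Build a random password using every character class the text mentions."""
    if length < 4:
        raise ValueError("length must be at least 4 to fit all character classes")
    pools = [string.ascii_lowercase, string.ascii_uppercase, string.digits, SPECIALS]
    # Guarantee one character from each class, then fill from the combined pool.
    chars = [secrets.choice(pool) for pool in pools]
    combined = "".join(pools)
    chars += [secrets.choice(combined) for _ in range(length - len(pools))]
    # Shuffle so the guaranteed characters do not sit in predictable positions.
    secrets.SystemRandom().shuffle(chars)
    return "".join(chars)


if __name__ == "__main__":
    print(generate_password())
```

The sketch uses `secrets` rather than `random` because `secrets` is designed for security-sensitive values. Whatever you generate, store it in a password manager instead of reusing it across accounts.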

Use security tools for extra protection

No tool can block AI voice scams entirely, but the right ones can help minimize potential fallout. For an all-in-one security and privacy solution, check out Surfshark One.

Here’s how it can provide an extra layer of protection:

- Surfshark Alert: notifies you if your personal info appears in data breaches or leaks, helping you stay on guard against voice scams that might exploit your data to sound believable;

- Surfshark Antivirus: helps block malware, phishing links, and spyware that scammers might use alongside AI voice scams to steal your accounts or personal info;

- Surfshark VPN (Virtual Private Network): encrypts your internet traffic and masks your IP (Internet Protocol) address, making it harder for scammers to target you or abuse your data to seem more convincing.

What to do if you’ve been targeted

If you suspect you’re dealing with an AI voice scam, act fast to shut it down and minimize the damage.

Stop communicating

First, end the call or stop replying immediately, even if the person sounds urgent or desperate. Don’t bother explaining, arguing, or testing them — scammers count on keeping you engaged so they can pile on the pressure and extract more information or money. Any extra interaction only gives them more opportunities to exploit you.

Verify the person’s identity

If something sounds even slightly off, take it as a sign that you should go to the source directly. Verify through a separate, trusted channel: call the person on a number you already have, message them through an existing chat, or use the official website or app. For banks or similar institutions, a quick in-person visit is the safest option.

Report the scam attempt

Let the platform where the scam occurred know — whether that’s your phone carrier, messaging app, social network, or email provider. For scams involving financial information, threats, or impersonation, contact your local law enforcement or consumer protection agency. In the US, the FTC (Federal Trade Commission) and the FBI’s IC3 (Internet Crime Complaint Center) handle these reports.

Change security settings if needed

If you shared any info — even minor details like names — during a suspicious interaction, assume it could be used against you. Take the initiative to secure your accounts: change passwords, enable 2FA (Two-factor Authentication), review recent login activity, and remove any unfamiliar devices. If banking or payment details came up, contact your bank to flag potential fraud.

Activate breach monitoring tools

Tools like Surfshark Alert let you know if your personal information ends up online after a data leak. This means you can promptly take necessary actions like changing compromised passwords or enabling 2FA. It also helps you stay ahead of AI voice scammers who might try to use your leaked info to pass themselves off as the real deal.

AI voice scams in the news

From real-world cases to artificial intelligence experts raising alarms, AI voice scams are a regular fixture in the news.

Let’s run through some ways AI voice scams made headlines recently.

Colorado parent scam

A woman in Colorado, US, received a frantic AI-generated call that sounded like her daughter. The caller, claiming her daughter had been abducted, demanded $2,000 for her release. After sending the money, the mother learned that the daughter was safe at home all along.

US officials alert

The FBI has issued an alert about scammers impersonating senior US officials using AI-generated voice and text messages. These fraudsters try to build trust first before persuading victims to share access to their personal or official accounts.

Italian ransom scam

Fraudsters mimicked Italian Defence Minister Guido Crosetto’s voice to call prominent business figures, claiming kidnapped journalists in the Middle East needed urgent ransom payments. Massimo Moratti, former owner of soccer club Inter Milan, reportedly sent nearly €1 million across two transactions.

Industry concern

OpenAI CEO Sam Altman has voiced his concern that financial institutions still accept voice prints for authentication. He highlighted a growing “fraud crisis” as AI makes it incredibly easy for malicious actors to impersonate others.

Tips to reduce your digital voice footprint

Once your voice is online, you have very little say over where it ends up and how it’s used. That’s why the best move is to limit how much of your voice is publicly available.

A few simple habits can help:

- Limit audio content on social media: avoid posting videos where your voice is clearly isolated, and opt for captions or background music instead;

- Simplify voicemail recordings: switch to a generic greeting or keep custom messages short, neutral, and free of emotional cues or identifying details;

- Clean up old content: check past uploads on platforms like Instagram, TikTok, or YouTube and delete, archive, or restrict anything with clear, uninterrupted audio;

- Tighten privacy settings on social apps: limit who can view, download, share, or remix your content and disable search engine indexing.

Don’t fall for a fake voice

AI voice scams are extremely tricky because scammers can imitate real voices with shocking accuracy using just a few seconds of audio and free online tools. That’s why it’s more important than ever to stay cautious and keep an eye out for warning signs.

Better yet, take extra precautions to protect yourself and minimize potential damage. Use safe words, keep your voice off public sites, and double-check calls from unknown numbers. For added protection, consider Surfshark One — which includes Surfshark Alert, Antivirus, VPN, and more.

FAQ

Are AI voice scams real?

Yes, AI voice scams are very real and increasingly common. Victims may face serious consequences such as financial loss, identity theft, and compromise of sensitive information.

How can you tell if a voice is AI or real?

It can be very difficult to tell if a voice is AI-generated or real. Your best bet is to look out for signs like a robotic tone, abrupt start-stops, awkward pauses, or a complete lack of emotion. If something feels off, that’s your cue to take a step back and double-check.

How do you protect yourself from AI voice scams?

To protect yourself from AI voice scams, use safe words with family and friends to confirm identities during calls or messages. Additionally, always verify suspicious calls through another trusted source or channel. You can also add an extra layer of protection with security tools like breach monitoring, antivirus, and a VPN.