One in five AI incidents relates to elections

2024 is a year of elections¹, so let's explore how this has shaped AI incidents so far. An AI incident can be defined as an event or situation where an artificial intelligence system causes or contributes to harm, or the potential for harm, to individuals, organizations, or society. This week’s chart focuses on election-related AI incidents over the past year and examines how many of them involve prominent US candidates such as Joe Biden and Donald Trump.

Key insights

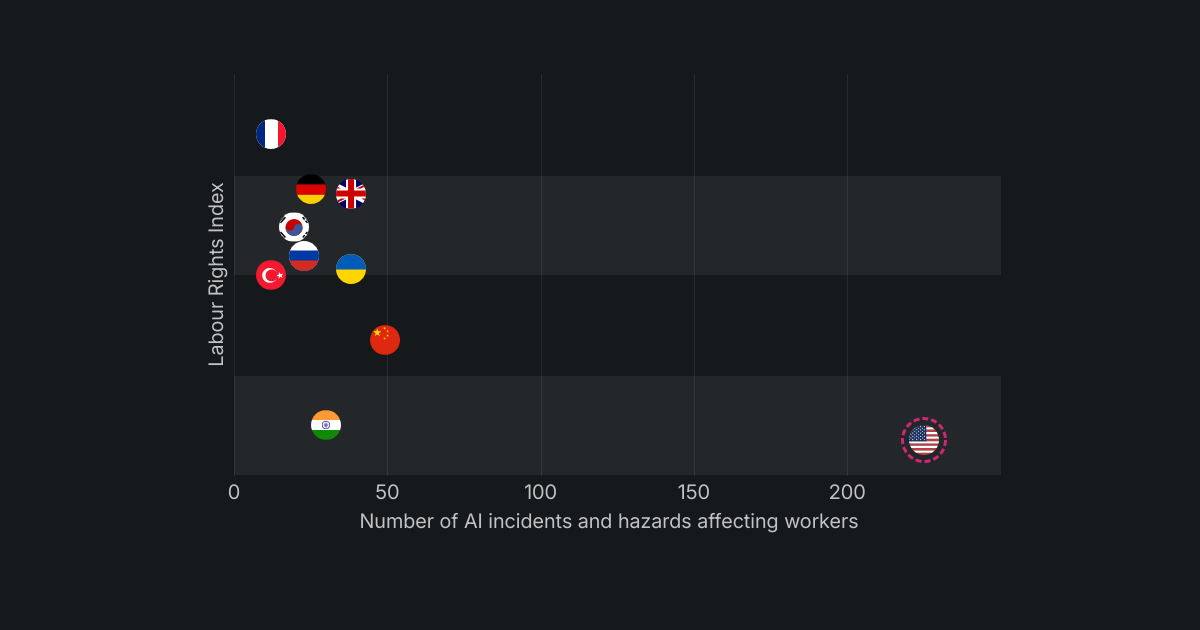

- Over the last year, 121 AI incidents were recorded, and more than a fifth of them (27 incidents) concerned elections. The first six months of the analyzed period saw 6 election-related incidents (12% of all incidents in that period), while the second half saw 21 (30%). February recorded the highest number of election-related incidents, 8, including 2 related to Pakistan's election that month.

- Roughly a third of election-related incidents involve current US President Joe Biden or the Republican candidate in the upcoming election, Donald Trump. Joe Biden was involved in 2 incidents, while Donald Trump was mentioned in 6 (three times as many). One incident, for example, was caused by the AI Image Creator, part of Microsoft’s Bing and Windows Paint, which generated extremely violent images of current and former presidents and other prominent figures, such as Hillary Clinton².

- The most common format of AI-generated content is video. Deepfake videos account for half of the election-related incidents. The second most common format is audio, followed by images.

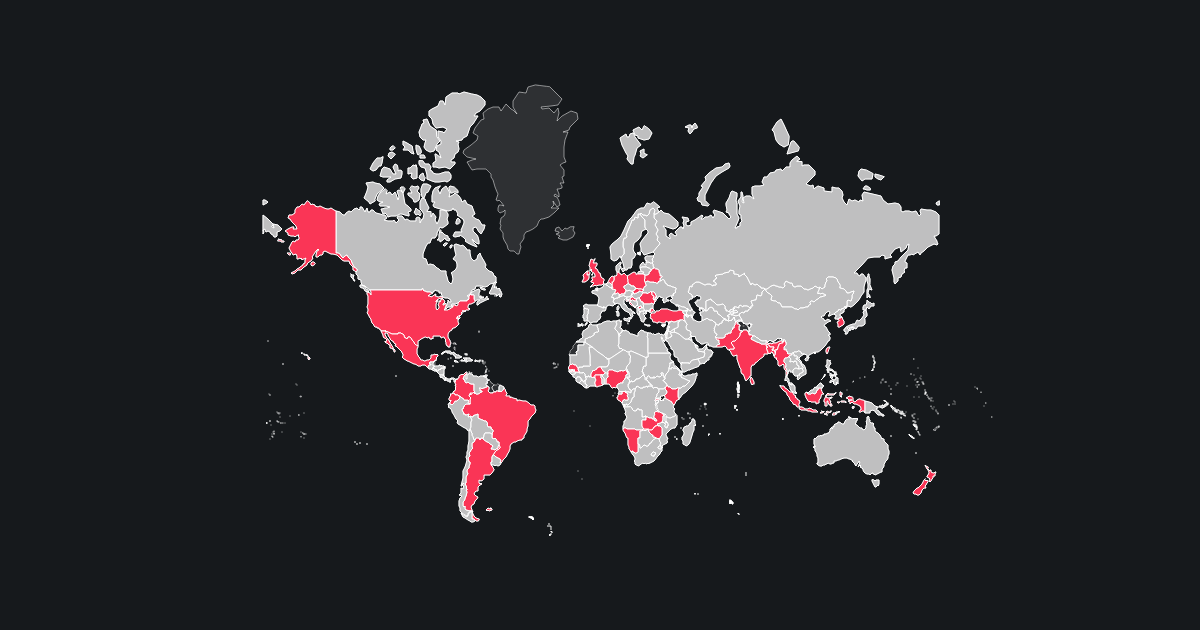

- Who deployed the AI systems behind these incidents? A fifth of election-related incidents were caused by systems whose deployers are associated with Russia or China. Half of these incidents involve US entities as harmed parties. However, threats can also originate domestically. For example, in early March, supporters of Donald Trump shared AI-generated images depicting him with Black voters in an attempt to win over African-American voters³.
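The headline shares in the key insights follow directly from the reported counts. The quick check below uses only the figures stated in this article. The variable names and the `share` helper are illustrative and are not part of the original analysis.

```python
# Counts as reported in the key insights (June 2023 to May 2024).
total_incidents = 121
election_incidents = 27
biden_incidents = 2
trump_incidents = 6

def share(part: int, whole: int) -> float:
    """Return part as a percentage of whole."""
    return 100 * part / whole

election_share = share(election_incidents, total_incidents)
print(f"Election-related share: {election_share:.1f}%")  # 22.3%, i.e. more than one in five
print(f"Trump vs. Biden ratio: {trump_incidents / biden_incidents:.0f}x")  # 3x
```

At 22.3%, the election-related share sits just above the "one in five" headline figure.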

Methodology and sources

On June 6, 2024, we retrieved data from the AI Incident Database on AI incidents recorded between June 1, 2023 and May 31, 2024. We classified incidents as election-related or non-election-related, and identified those involving Donald Trump and Joe Biden, by searching for the keywords “voter”, “election”, “president”, “biden”, and “trump” in each incident's title, description, and alleged harmed or nearly harmed parties.
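The keyword classification described above can be sketched in Python. This is a minimal illustration, not the study's actual code. The record fields (`title`, `description`, `harmed_parties`) and the two sample incidents are hypothetical. The case-insensitive substring matching is also an assumption about how the keywords were applied.

```python
from dataclasses import dataclass, field

# Keywords listed in the methodology; how they are matched below is an assumption.
ELECTION_KEYWORDS = ("voter", "election", "president", "biden", "trump")

@dataclass
class Incident:
    # Hypothetical record layout; the AI Incident Database export may differ.
    title: str
    description: str
    harmed_parties: list[str] = field(default_factory=list)

    def searchable_text(self) -> str:
        """Join the three searched fields into one lowercased string."""
        return " ".join([self.title, self.description, *self.harmed_parties]).lower()

def matches(incident: Incident, keyword: str) -> bool:
    """Case-insensitive substring match of a keyword against the searched fields."""
    return keyword in incident.searchable_text()

def classify(incident: Incident) -> dict[str, bool]:
    """Flag an incident as election-related and note which candidates it mentions."""
    return {
        "election_related": any(matches(incident, kw) for kw in ELECTION_KEYWORDS),
        "biden": matches(incident, "biden"),
        "trump": matches(incident, "trump"),
    }

# Hypothetical sample records, for illustration only.
incidents = [
    Incident("Example: AI-generated image of Trump at a rally",
             "Image shared online ahead of the election.", ["voters"]),
    Incident("Example: chatbot gives incorrect medical advice",
             "A health chatbot misinformed users.", ["patients"]),
]
results = [classify(i) for i in incidents]
for incident, flags in zip(incidents, results):
    print(incident.title, flags)
```

Plain substring matching is the simplest reading of "searching for keywords", but it can produce false positives: "trump" also matches "trumpet". A more careful pipeline might use word-boundary regular expressions and a manual review of the flagged incidents.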

In the AI Incident Database, a "deployer" refers to an entity or organization responsible for implementing or putting into operation the AI systems involved in an incident.

For the complete research material behind this study, visit here.